tl;dr

Large language models’ lack of predictability makes it risky to deploy generative AI-based applications for delivering mental health care

Approaches that instead use generative AI chatbots as conversational companions (not as therapeutics) make more sense given the maturity of our current AI tools

Unlike most small startups, big tech companies have the needed infrastructure, know-how, proprietary chat data, and built-in user bases to develop and distribute high-quality conversational companions

The woes of (AI) bots

The medical field has been developing artificial intelligence (AI) and machine learning (ML)-based tools since the 1970s. In that decade, Stanford academics developed MYCIN, an algorithm that helped to identify bacteria that caused severe infections. MYCIN was able to propose an effective therapy 69% of the time – better than most infectious disease experts. [1]

While AI/ML tools have long been deployed in healthcare for some use cases (e.g., predicting patient outcomes or treatment efficacy, optimizing logistics such as operating room usage), research breakthroughs in neural networks and the corresponding development of surprisingly intelligible large language models (LLMs) have led to a frenzy of new activity. It seems like every company is talking about how to leverage these tools, and new startups building generative AI-enabled healthcare applications are suddenly ubiquitous. This is despite challenges such as the slow adoption of AI in healthcare to date, a lack of robust, vetted healthcare-specific foundation models, and most startups’ lack of access to the large amounts of data needed to train these models.

Generative AI-based conversational agents (aka chatbots) are an application of LLMs that shows tremendous promise across sectors, for use cases ranging from customer service to entertainment to care delivery. A plethora of healthcare startups are building AI chatbots to support people with mental health care needs. Given the shortage of mental health care providers in the United States and elsewhere, I can see why the idea of automation in this space sounds appealing.

But chatbots for mental health care have received mixed reviews. John Oliver famously roasted Woebot, one of the earliest and best-known entrants in this space, for its inappropriate and potentially dangerous responses to users. (According to its website, Woebot has a team of writers that scripts the content the bot serves up to users, ensuring interactions remain appropriate). Others, in contrast, have found value in Woebot; Dhruv Khullar wrote in The New Yorker of his experience trying out the app, “I knew that I was talking to a computer, but in a way I didn’t mind. The app became a vehicle for me to articulate and examine my own thoughts. I was talking to myself.”

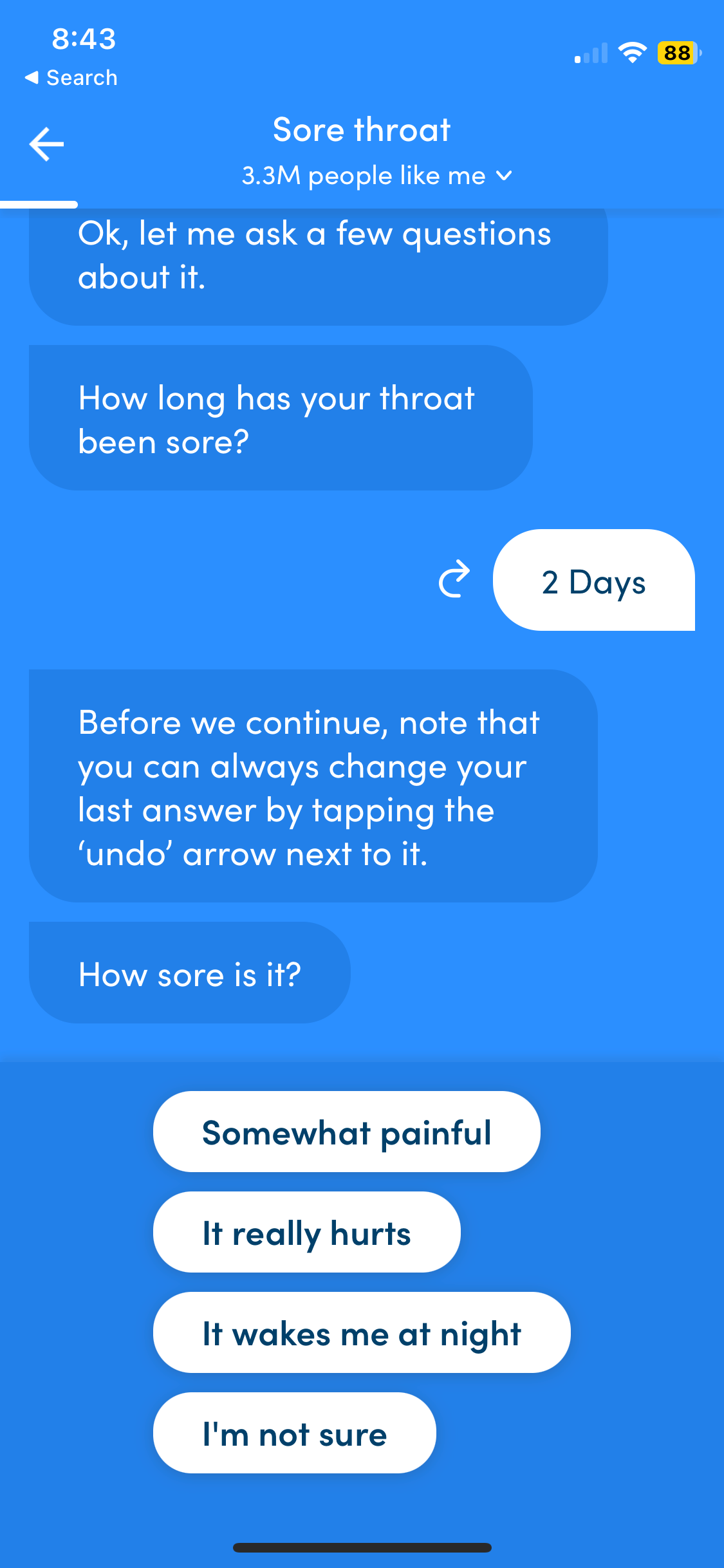

While apps like Woebot or the general primary care chatbot launched by K Health are heavily scripted and require users to mostly select from pre-generated text choices – more like a clinical decision tree than a true implementation of generative AI – a slew of new products that deploy LLMs in their consumer-facing experience come with a new set of risks. Given that LLMs have been known to provide non-factual and at times strange or inappropriate responses to users, a chatbot that relies on generative AI could potentially respond to a user in a way that might exacerbate their mental health condition and/or lead to self-harm.

Trying out the K Health app a few days ago

How chatbots work (a layperson’s primer on neural networks)

In a nutshell, generative AI-powered chatbots are applications built on top of LLMs, such as the GPT models behind OpenAI’s ChatGPT or Anthropic’s Claude. LLMs are based on neural networks – mathematical models developed loosely from our understanding of how neurons in the human brain work. (Stephen Wolfram has a great explainer of LLMs here). Whereas in the traditional paradigm of “software 1.0” programmers write lines of code that direct the computer to produce a certain result, in the paradigm of neural networks (or “software 2.0”, as Andrej Karpathy christened it in 2017), we give the computer some guidelines and data and ask it to determine the optimal result. The optimization happens without humans writing each specific line of code. In fact, according to Karpathy, “the optimization can find much better code than what a human can write.” This is kind of a big deal: the neural network is figuring out the answer/response on its own, without line-by-line guidance from humans. So when we present a prompt to an LLM, the text of the prompt is translated into numerical representations, the underlying neural network model runs the numbers and spits out a numerical output, and that output is translated back into text that humans (usually) recognize as language. (This is, of course, a highly simplified explanation).
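For readers who like to see things in code, here is a toy Python sketch of the three steps described above: text in, numbers through a model, text back out. It is only an illustration under heavy assumptions. The vocabulary, the `encode`/`decode` helpers, and the hard-coded `toy_model` lookup are all made up for this post; a real LLM uses a learned tokenizer and a neural network with billions of learned weights in place of the lookup table.

```python
# Toy illustration only. Every name below is hypothetical; nothing here
# reflects how any particular LLM is actually implemented.

VOCAB = ["<unk>", "how", "are", "you", "i", "am", "fine", "today"]
TOKEN_TO_ID = {token: i for i, token in enumerate(VOCAB)}


def encode(text: str) -> list[int]:
    """Step 1: translate text into numbers (one id per word)."""
    return [TOKEN_TO_ID.get(word, 0) for word in text.lower().split()]


def toy_model(ids: list[int]) -> list[int]:
    """Step 2: numbers in, numbers out.

    A real model computes its output from learned weights. This lookup
    table is hard-coded purely to make the pipeline visible.
    """
    canned = {(1, 2, 3): [4, 5, 6]}  # "how are you" -> "i am fine"
    return canned.get(tuple(ids), [0])  # unknown prompts map to "<unk>"


def decode(ids: list[int]) -> str:
    """Step 3: translate the numerical output back into text."""
    return " ".join(VOCAB[i] for i in ids)


def respond(prompt: str) -> str:
    return decode(toy_model(encode(prompt)))


if __name__ == "__main__":
    print(encode("How are you"))   # [1, 2, 3]
    print(respond("How are you"))  # i am fine
```

The point of the sketch is the shape of the pipeline, not the middle step. In "software 2.0" nobody writes the contents of `toy_model` by hand; the mapping is found by optimization over training data.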

While there have been important breakthroughs in neural networks over the last few years, as evidenced by the remarkable, “human-like” responses produced by today’s LLMs, we still don’t understand exactly how they work. Yes, the experts have figured out how to develop models that give us seemingly intelligent responses. But Wolfram continually emphasizes that there is no known theoretical reason why a neural network designed for generating text should do such a good job. He writes, “ChatGPT doesn’t have any explicit “knowledge” of [grammatical] rules. But somehow in its training it implicitly “discovers” them—and then seems to be good at following them. So how does this work? At a ‘big picture’ level it’s not clear.” The question of how a neural network generates any particular output is one that many organizations and researchers at the forefront of neural networks are studying, including notably the safety-oriented generative AI company Anthropic.

Since we don’t know exactly why an LLM returns a specific response, and since a given model can return different responses to the same prompt, there is inherent risk in using today’s LLMs in settings where predictability and safety are paramount. For that reason, using chatbots to treat patients with mental health conditions is generally viewed as too risky given the current state of our generative AI tools.
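One common reason the same prompt can produce different responses is that chatbots typically pick each next word by sampling from a probability distribution instead of always taking the single most likely option. Here is a minimal sketch of that idea, assuming made-up scores for three candidate words. The `LOGITS` values and function names are hypothetical, and real systems sample over tens of thousands of tokens with additional settings that I am leaving out.

```python
import math
import random

# Hypothetical scores ("logits") a model might assign to candidate next words
# after a prompt like "How are you feeling today? I feel ...".
LOGITS = {"fine": 2.0, "okay": 1.5, "tired": 0.5}


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {word: score / temperature for word, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(score - peak) for word, score in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


def sample_next_word(logits, temperature=1.0, rng=random):
    """Draw one word at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


def greedy_next_word(logits):
    """Always pick the highest-scoring word: same input, same output."""
    return max(logits, key=logits.get)


if __name__ == "__main__":
    print([sample_next_word(LOGITS) for _ in range(5)])  # varies run to run
    print(greedy_next_word(LOGITS))                      # always "fine"
```

Greedy selection makes the output repeatable, but it does not make it safe or explainable: we still would not know why the model scored "fine" above "tired" in the first place.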

Big tech is eating the (chatbot) world

A workaround that some companies in this space are taking is positioning their generative AI-powered chatbots as “subclinical”, “adjacent”, or “complementary” to clinical care – that is, not specifically intended to treat someone for a clinical diagnosis. Instead, these chatbots are intended to provide companionship or support to people who are lonely or unable to access clinical care, or as a supplement to ongoing clinical care.

These startups typically build their conversational companions by starting with an off-the-shelf foundation model (like GPT-3). They fine-tune the foundation model with mock chats written by contractors (such as therapists), and in some cases they follow on with reinforcement learning, also using contractors. (If terms like fine-tuning and reinforcement learning are unfamiliar, check out this excellent explainer video by Andrej Karpathy). Key challenges with this approach are that the training data doesn’t come from real human interactions and that it is time-consuming and costly to generate manually. (Some companies in the healthcare space have also tried training generative AI models with synthetic data, but that has come with its own challenges).

In contrast, many big tech companies already have access to huge amounts of data from real chat sessions that have occurred on their platforms – think Apple iMessage, Google Chat, Facebook Messenger. Many of these companies also have or are actively developing the other key components of the tech stack needed to build generative AI applications such as conversational AI assistants. (Meta is already building such assistants).

Not only do these companies have the right inputs, but they also can benefit from launching general-purpose chatbots for a range of use cases – automating customer service, helping creators to create more and better content, driving greater engagement on their own platforms, etc. Such chatbots might meet the bar for a conversational companion for loneliness or mental health support, or could be fine-tuned with mental health-specific data that is manually generated or (depending on data privacy restrictions) potentially sourced from mental health care companies that already interact with patients via chat. Either way, the tech companies are typically starting from a stronger position to develop quality conversational AI products. (Microsoft already offers a tool for healthcare companies seeking to develop their own virtual assistants).

Finally, the big tech companies have massive built-in user bases. For context, the Facebook app crossed 3 billion monthly active users in Q2 2023. Many people turn to Facebook groups to discuss health conditions, whereas patient communities such as PatientsLikeMe have struggled to gain comparable traction (the PatientsLikeMe website states they have over 850,000 users). Similarly, we can expect the big tech companies to achieve greater reach with their conversational AI assistants than startups selling point solutions. Furthermore, these startups are likely to have a difficult time monetizing their products. In a predominantly fee-for-service healthcare system, healthcare providers are typically reluctant to incur added expenses even if they could yield health benefits for patients. Payers may be more interested given the potential downstream cost savings, but most patients won’t want their health insurance company to have access to their personal chats, especially if the chats pertain to their mental health.

Conclusion

It’s hard to see how small mental health startups that lack comparable generative AI infrastructure, expertise, and large volumes of relevant, proprietary data can compete with large tech companies developing conversational AI assistants. The silver lining is that chatbots deployed through big tech will likely be highly accessible and potentially able to help with use cases such as loneliness.

Although this may be a difficult space for startups, there are a number of other applications of AI in mental health care that do seem like promising opportunities. AI-enabled tools can enhance therapist training, automate clinician documentation and other administrative tasks, predict and assess patient outcomes and tailor treatment accordingly, and more. These are less buzzy use cases than chatbots, but they can have real impacts on access, quality, and cost of care. I look forward to seeing how the next generation of mental health care companies can help make our care delivery system at least a little bit better.

[1] Source: MIT Machine Learning for Healthcare course (lecture #1)
